UNIT_3__DL[1]

Uploaded by

Prawin raj

UNIT III

APPLICATIONS OF DEEP LEARNING TO COMPUTER VISION

Image segmentation - Object detection - Automatic image captioning - Image generation with

Generative adversarial networks - Video to text with LSTM models - Attention models for

computer vision tasks

1. Image segmentation

Segmentation is one of the most important operations in computer vision. Image

segmentation is the process of dividing an image into multiple regions such that the pixels

in each region belong to the same class. Pixels are grouped according to specific criteria,

for example, color or texture. This process is also called pixel-level classification. In other

words, it involves partitioning images (or video frames) into multiple segments or objects.

Over the last 40 years, various segmentation methods have been proposed, ranging from MATLAB

image segmentation and traditional computer vision methods to the state of the art deep

learning methods. Especially with the emergence of Deep Neural Networks (DNN), image

segmentation applications have made tremendous progress.

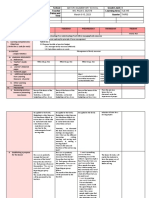

1.2 Image Segmentation Techniques

There are various image segmentation techniques available, and each technique has its own

advantages and disadvantages.

1. Thresholding: Thresholding is one of the simplest image segmentation techniques, where

a threshold value is set, and all pixels with intensity values above or below the threshold

are assigned to separate regions.

2. Region growing: In region growing, the image is divided into several regions based on

similarity criteria. This segmentation technique starts from a seed point and grows the

region by adding neighboring pixels with similar characteristics.

3. Edge-based segmentation: Edge-based segmentation techniques are based on detecting

edges in the image. These edges represent boundaries between different regions and are

detected using edge detection algorithms.

4. Clustering: Clustering techniques group pixels into clusters based on similarity criteria.

These criteria can be color, intensity, texture, or any other feature.

5. Watershed segmentation: Watershed segmentation is based on the idea of flooding an

image from its minima. In this technique, the image is treated as a topographic relief,

where the intensity values represent the height of the terrain.

6. Active contours: Active contours, also known as snakes, are curves that deform to find

the boundary of an object in an image. These curves are controlled by an energy function

that is lowest when the curve is smooth and lies on the object boundary.

7. Deep learning-based segmentation: Deep learning techniques, such as Convolutional

Neural Networks (CNNs), have revolutionized image segmentation by providing highly

accurate and efficient solutions. These techniques use a hierarchical approach to image

processing, where multiple layers of filters are applied to the input image to extract

high-level features.

8. Graph-based segmentation: This technique represents an image as a graph and

partitions the image based on graph theory principles.

9. Superpixel-based segmentation: This technique groups a set of similar image pixels

together to form larger, more meaningful regions, called superpixels.
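The first two techniques in the list above are simple enough to sketch in a few lines. The following is a minimal illustration in plain Python, assuming a grayscale image stored as a list of rows. The function names `threshold_segment` and `region_grow`, the 4-connected neighbourhood, and the fixed tolerance around the seed intensity are illustrative choices for this example, not part of any particular library.

```python
from collections import deque


def threshold_segment(image, threshold):
    """Label each pixel 1 if its intensity exceeds the threshold, else 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (row, col), adding 4-connected neighbours
    whose intensity is within `tolerance` of the seed intensity."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


# Hypothetical 4x4 grayscale image: a bright 2x2 blob on a dark background.
image = [
    [10, 12, 11, 10],
    [11, 200, 210, 12],
    [10, 205, 198, 11],
    [12, 10, 11, 10],
]
mask = threshold_segment(image, threshold=128)
blob = region_grow(image, seed=(1, 1), tolerance=20)
```

Here `mask` marks the bright blob with 1s, and `blob` holds the coordinates of the same four pixels reached from the seed.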

1.3 Applications of Image Segmentation

Image segmentation problems play a central role in a broad range of real-world

computer vision applications, including road sign detection, biology, the evaluation of

construction materials, or video security and surveillance. Also, autonomous vehicles and

Advanced Driver Assistance Systems (ADAS) need to detect navigable surfaces or apply

pedestrian detection.

Image segmentation is widely applied in medical imaging applications, such as tumor

boundary extraction or measurement of tissue volumes. One opportunity here is to design

standardized image databases that can be used to evaluate fast-spreading new diseases and

pandemics.

Deep Learning-based Image Segmentation has been successfully applied to segment

satellite images in the field of remote sensing, including techniques for urban planning or

precision agriculture. Also, images collected by drones (UAVs) have been segmented using

Deep Learning based techniques, offering the opportunity to address important environmental

problems related to climate change.

2. Object Detection

Object detection is an important computer vision task used to detect instances of

visual objects of certain classes (for example, humans, animals, cars, or buildings) in digital

images such as photos or video frames. The goal of object detection is to develop

computational models that provide the most fundamental information needed by computer

vision applications: “What objects are where?”.

2.1 Importance of Object Detection

Object detection is one of the fundamental problems of computer vision. It forms the

basis of many other downstream computer vision tasks, for example, instance and image

segmentation, image captioning, object tracking, and more. Specific object detection

applications include pedestrian detection, animal detection, vehicle detection, people

counting, face detection, text detection, pose detection, or number-plate recognition.

2.2 Object Detection and Deep Learning

The rapid advances in deep learning techniques have greatly accelerated the

momentum of object detection technology. With deep learning networks and the computing

power of GPUs, the performance of object detectors and trackers has greatly improved,

achieving significant breakthroughs in object detection.

Machine learning (ML) is a branch of artificial intelligence (AI) in which a model

learns patterns from examples or sample data rather than from hand-written rules. In object

detection this usually means supervised learning on annotated images. Deep learning is a

specialized form of machine learning in which features are learned in successive stages (layers).

2.3 How Object Detection works

Object detection can be performed using either traditional image processing techniques or

modern deep learning networks.

1. Image processing techniques generally don’t require historical data for training and are

unsupervised in nature. OpenCV is a popular tool for image processing tasks.

Pros: Because no training is involved, these techniques do not require annotated images,

that is, data labeled manually by humans (for supervised training).

Cons: These techniques are limited by several factors, such as complex

scenarios (no uniform background), occlusion (partially hidden objects),

illumination and shadows, and clutter.

2. Deep Learning methods generally depend on supervised or unsupervised learning, with

supervised methods being the standard in computer vision tasks. The performance is

limited by the computation power of GPUs, which is rapidly increasing year by year.

Pros: Deep learning object detection is significantly more robust to occlusion,

complex scenes, and challenging illumination.

Cons: A huge amount of training data is required; the process of image

annotation is labor-intensive and expensive. For example, labeling 500,000

images to train a custom DL object detection algorithm is considered a small

dataset. However, many benchmark datasets (MS COCO, Caltech, KITTI,

PASCAL VOC, V5) provide labeled data.
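As a rough illustration of the classical, training-free route, the sketch below answers "what objects are where?" for a binary mask (for instance, one produced by thresholding) by flood-filling each connected blob and reporting its bounding box. The function name `find_bounding_boxes` and the (top, left, bottom, right) box format are assumptions made for this example; in practice a library such as OpenCV would normally do this work.

```python
def find_bounding_boxes(mask):
    """Return one (top, left, bottom, right) box per 4-connected blob of 1s."""
    rows, cols = len(mask), len(mask[0])
    seen = set()
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or (r, c) in seen:
                continue
            # Flood-fill this blob and track how far it extends.
            stack = [(r, c)]
            seen.add((r, c))
            top, left, bottom, right = r, c, r, c
            while stack:
                y, x = stack.pop()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols:
                        if mask[ny][nx] == 1 and (ny, nx) not in seen:
                            seen.add((ny, nx))
                            stack.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


# Hypothetical binary mask with two separate foreground blobs.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
boxes = find_bounding_boxes(mask)
```

This kind of detector needs no labeled data, but it inherits the weaknesses listed above: touching or occluded objects merge into one blob, and poor illumination corrupts the mask it depends on.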

2.4 Advantages and Disadvantages of Object Detection

Object detectors are incredibly flexible and can be trained for a wide range of tasks

and custom, special-purpose applications. The automatic identification of objects, persons,

and scenes can provide useful information to automate tasks (counting, inspection,

verification, etc.) across the value chains of businesses.

The main disadvantage of object detectors is that they are computationally very

expensive and require significant processing power. Especially when object detection models

are deployed at scale, the operating costs can quickly increase and challenge the economic

viability of business use cases.

3. Automatic Image Captioning

Automatic image captioning using deep learning is an exciting area of research and

application that combines computer vision and natural language processing. The goal is to

develop algorithms and models that can accurately generate descriptive captions for images.

This technology enables machines to understand and describe the content of images in a

human-like manner.

The process typically involves a deep learning model, such as a convolutional neural

network (CNN) for image processing and a recurrent neural network (RNN) for generating

text. The CNN extracts relevant features from the image, while the RNN processes these

features and generates a coherent and contextually appropriate caption.

One common approach is to use a pre-trained CNN, such as VGG16 or ResNet,

to extract features from the images. These features are

then fed into an RNN, often in the form of a Long Short-Term Memory (LSTM) network,

which is capable of learning sequential dependencies and generating captions word by word.
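The word-by-word generation step can be sketched as a simple greedy loop. In the example below the trained LSTM decoder is replaced by a hypothetical lookup table (`TOY_SCORES`) so that the code runs on its own; a real decoder would compute the scores from the CNN image features and its hidden state. The `<start>` and `<end>` tokens and the function name `greedy_caption` are likewise assumptions for illustration.

```python
START, END = "<start>", "<end>"

# Hypothetical stand-in for a trained LSTM decoder: maps the previous word
# to scores for candidate next words. A real model would also condition
# on the CNN image features and on its own hidden state.
TOY_SCORES = {
    START: {"a": 0.9, "dog": 0.1},
    "a": {"dog": 0.7, "cat": 0.3},
    "dog": {"runs": 0.6, END: 0.4},
    "runs": {END: 1.0},
}


def greedy_caption(image_features, scores=TOY_SCORES, max_words=10):
    """Generate a caption word by word, always picking the top-scoring word."""
    caption = []
    previous = START
    for _ in range(max_words):
        candidates = scores[previous]  # image_features is unused by the toy table
        word = max(candidates, key=candidates.get)
        if word == END:
            break
        caption.append(word)
        previous = word
    return " ".join(caption)


caption = greedy_caption(image_features=[0.2, 0.8, 0.1])
```

Greedy selection is only one possible decoding strategy; it is used here because it is the shortest way to show the loop of feeding each generated word back in as the next input.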

Training such models requires a large dataset of images paired with corresponding

captions, allowing the algorithm to learn the relationships between visual content and textual

descriptions. Popular datasets for this task include MS COCO (Microsoft Common Objects in

Context) and Flickr30k.

Automatic image captioning has various practical applications, including aiding

visually impaired individuals by providing detailed descriptions of images, enhancing image

search functionality, and facilitating content understanding in areas like social media and

healthcare.

3.1 Why Do We Need Automatic Image Captioning?

Automatic image captioning serves several important purposes across various domains. Here

are some key reasons why this technology is valuable:

1. Accessibility for Visually Impaired Individuals:

Automatic image captioning enhances accessibility by providing detailed

descriptions of images. Visually impaired individuals can use this technology to

understand and interact with visual content on the internet, social media, and other

platforms.

2. Improved Image Search and Organization:

Image captioning facilitates more accurate and efficient image search. Users can

search for images based on textual descriptions, making it easier to find relevant

content and organize large image databases.

3. Content Understanding in Social Media:

Social media platforms generate massive amounts of image content. Automatic

image captioning helps in understanding and categorizing this content, improving

content moderation, and enhancing user experience by providing context to

images.

4. Assisting Cognitively Impaired Individuals:

For individuals with cognitive impairments, image captions can provide additional

context and aid in understanding visual information. This is particularly relevant

in educational settings and healthcare applications.

5. Enhancing Human-Machine Interaction:

In human-computer interaction scenarios, such as virtual assistants or robotics,

automatic image captioning enables machines to comprehend and respond to

visual stimuli. This is crucial for developing more intuitive and user-friendly

interfaces.

6. Facilitating Easier Content Creation:

Content creators and marketers can benefit from automatic image captioning by

quickly generating descriptive text for their visual content. This can save time and

effort in the content creation process.

7. Enabling Applications in Healthcare:

In medical imaging, automatic image captioning can assist healthcare

professionals in understanding and documenting radiological images. It has the

potential to streamline medical reporting and enhance collaboration between

medical experts.

8. Improving Assistive Technologies:

Automatic image captioning contributes to the development of advanced assistive

technologies, enhancing the capabilities of devices designed to assist individuals

with disabilities in their daily activities.

3.2 Applications of Automatic image captioning

1. Content Moderation in social media:

Automatic image captioning is used in social media platforms to enhance content

moderation. It helps identify and filter inappropriate or harmful images by

analyzing their content and context through generated captions.

2. Image Search and Retrieval:

Image captioning improves the accuracy of image search engines. Users can

search for images using descriptive text, making it easier to find relevant content

in large image databases.

3. Assistive Technologies:

In robotics and other assistive technologies, automatic image captioning enables

machines to understand and respond to visual stimuli, making human-machine

interaction more intuitive and versatile.

4. E-learning and Educational Tools:

Automatic image captioning enhances educational materials by providing

descriptions for visual content. This is beneficial in e-learning platforms, making

educational resources more accessible and inclusive.

5. Medical Imaging and Healthcare:

Automatic image captioning assists healthcare professionals in understanding and

documenting medical images. It can improve the efficiency of radiological

reporting and contribute to better collaboration among medical experts.

6. Enhancing Human-Computer Interaction:

In human-computer interaction scenarios, such as virtual reality or augmented

reality applications, automatic image captioning helps computers understand the

visual environment and respond accordingly, improving the overall user

experience.

3.3 Disadvantages of Automatic image captioning

1. Ambiguity and Subjectivity:

Automatic image captioning may struggle with ambiguous or subjective images

where multiple interpretations are possible. Captions generated in such cases may

lack accuracy and precision.

2. Lack of Creativity:

Current models may generate captions that are factual but lack creativity. The

generated captions may be straightforward descriptions and might miss the

nuanced or imaginative aspects of an image.

3. Overfitting to Training Data:

Automatic image captioning models can overfit to the specific characteristics of

the training data, making them less effective when faced with images outside the

training set or in different contexts.

4. Difficulty in Handling Rare Scenarios:

Models may struggle to generate accurate captions for rare or unusual scenarios

that are not well-represented in the training data. This limitation can affect the

model's ability to handle diverse situations.

5. Limited Understanding of Context:

Captioning models may have difficulty understanding contextual information and

relationships between objects in an image. This can lead to captions that lack

coherence or fail to capture the overall context accurately.

4. Image generation with Generative adversarial networks

Image generation with Generative Adversarial Networks (GANs) refers to the process of

creating realistic images using a class of artificial intelligence models known as Generative

Adversarial Networks.

The fundamental idea behind GANs is to train two neural networks simultaneously: a

generator and a discriminator. These networks are engaged in a game-like scenario, hence the

term "adversarial."

4.1 Generator:

The Generator in a GAN is responsible for creating synthetic data, in this context,

realistic images. It takes random noise or a seed as input and transforms it into data that

should resemble the training data. The Generator's architecture is typically a neural network

that learns to map the input noise to the space of realistic images. Here are key aspects of the

Generator:

1. Architecture:

The Generator often employs a deep neural network architecture, such as a

convolutional neural network (CNN) or a deep feedforward network. This

architecture is designed to capture complex patterns and structures in the

training data.

2. Input:

The input to the Generator is typically a random noise vector sampled from a

simple distribution (like a Gaussian distribution). This input is the starting

point for generating diverse and realistic images.

3. Output:

The output of the Generator is an image. In the context of image generation,

the output layer often uses activation functions such as sigmoid or hyperbolic

tangent to ensure that the pixel values are within a valid range (e.g., [0, 1] with

sigmoid, or [-1, 1] with hyperbolic tangent for images normalized to that range).
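The two output activations mentioned above produce different ranges: sigmoid yields values in (0, 1), while hyperbolic tangent yields values in (-1, 1), so tanh outputs are usually rescaled before being shown as pixels. The short sketch below illustrates both cases; the helper name `tanh_to_uint8` and the sample pre-activation values are hypothetical.

```python
import math


def sigmoid(x):
    """Squash a real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_to_uint8(value):
    """Map a tanh output in [-1, 1] to an 8-bit pixel value in [0, 255]."""
    return round((value + 1.0) / 2.0 * 255)


raw_outputs = [-3.0, 0.0, 3.0]  # hypothetical pre-activation values
sigmoid_pixels = [sigmoid(x) for x in raw_outputs]
tanh_pixels = [tanh_to_uint8(math.tanh(x)) for x in raw_outputs]
```

Whichever activation is chosen, the training images need to be normalized to the same range so that real and generated images are comparable.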

4.2 Discriminator:

The Discriminator, on the other hand, plays the role of a critic. It evaluates whether a given

input is a real image from the training data or a synthetic image generated by the Generator.

Here are key aspects of the Discriminator:

1. Architecture:

Like the Generator, the Discriminator is often a neural network, commonly

a CNN. Its architecture is designed to process and classify images,

distinguishing between real and fake ones.

2. Input:

The Discriminator takes an image as input, either a real one from the

training dataset or a synthetic one generated by the Generator.

3. Output:

The output of the Discriminator is a probability score indicating the likelihood that

the input image is real. It typically uses a sigmoid activation function in the output

layer to produce a value between 0 and 1.
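The adversarial game between the two networks can be made concrete by looking at the losses each one minimizes. The sketch below assumes the commonly used binary cross-entropy formulation (with the so-called non-saturating generator loss); the notes above do not specify a loss, so treat this as one standard choice rather than the only one. The discriminator outputs are made-up numbers, not results from a trained model.

```python
import math


def bce(prediction, target, eps=1e-12):
    """Binary cross-entropy for one probability and a 0/1 target."""
    prediction = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(prediction)
             + (1 - target) * math.log(1.0 - prediction))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) close to 1 and D(fake) close to 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 on its fakes."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that the input is real).
d_real = [0.9, 0.8]
d_fake_early = [0.1, 0.2]  # generator is easily caught
d_fake_later = [0.6, 0.7]  # generator fools the discriminator more often
```

As the generator improves (from `d_fake_early` to `d_fake_later`), its own loss falls while the discriminator's loss rises, which is the tug-of-war that the term "adversarial" refers to.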

4.3 Image Generation with GANs:

1. Latent Space:

GANs sample from a latent space, a vector space of random noise inputs (typically of much

lower dimension than the images themselves) that the generator learns to map to meaningful

images. After training, this latent space captures the underlying features of the data distribution.

2. Versatility:

GANs are versatile and can be applied to various types of data generation, including

images, art, and even text. In the context of image generation, GANs have been particularly

successful in creating high-quality, diverse, and realistic images.

3. Applications:

GANs find applications in numerous domains, including art creation, image-to-image

translation, style transfer, and data augmentation. They have been used to generate faces,

landscapes, and even novel designs.

4. Challenges:

Despite their success, GANs face challenges such as mode collapse (repetitive

generation of similar samples), training instability, and sensitivity to hyperparameters.

4.4 Challenges and Considerations

Mode Collapse:

GANs may suffer from mode collapse, where the generator produces limited

varieties of samples, ignoring certain modes in the data distribution.

Training Instability:

Training GANs can be challenging and may suffer from instability. Achieving

a balance between the generator and discriminator is crucial.

Evaluation Metrics:

Assessing the quality of generated samples is subjective, and choosing

appropriate evaluation metrics can be non-trivial.

Ethical Considerations:

GANs raise ethical concerns, particularly when used to create deepfake

images or other forms of synthetic content that can be maliciously exploited.

4.5 Applications of Generative Adversarial Networks (GANs):

1. Data Augmentation:

GANs are widely used for data augmentation in machine learning. By generating

synthetic data, GANs help improve the performance and generalization of models,

particularly when the available labeled dataset is limited. This application is valuable in

various domains, including image classification and object detection.

2. Super-Resolution Imaging:

GANs are employed to enhance the resolution of images. This is particularly useful in

medical imaging and satellite imagery, where obtaining high-resolution data is challenging.

GANs can generate detailed images from lower-resolution inputs, aiding in clearer

visualizations and analyses.

3. Deepfake Creation:

GANs have been controversially applied to create deepfake videos and images. By

leveraging the adversarial training process, GANs can manipulate facial features and

expressions, making it appear as if individuals are saying or doing things they never did. This

application has raised ethical concerns and highlighted the need for deepfake detection

technologies.

4. Text-to-Image Synthesis:

GANs are utilized in text-to-image synthesis, where textual descriptions are

transformed into corresponding images. This has applications in content creation,

storytelling, and design. GANs learn to generate images based on textual cues, enabling the

creation of visual content from natural language descriptions.

5. Video to text with LSTM models

The term "Video to Text with LSTM models" refers to a process where Long Short-Term

Memory (LSTM) models are employed to convert information from videos into textual

representations. Here's a breakdown of the key components:

1. Video to Text: This involves extracting meaningful information, such as spoken

words or actions, from a video and converting it into a textual format. This process is

essential for tasks like video captioning, summarization, or indexing.

2. LSTM Models: LSTM is a type of recurrent neural network (RNN) architecture

designed to capture and remember long-term dependencies in sequential data. In the

context of video-to-text conversion, LSTM models are employed to understand the

temporal relationships and dependencies present in video data.

Sequential Processing: Videos are sequences of frames, and LSTM models are

well-suited for processing sequential data. Each frame can be treated as a time

step, allowing the model to understand the temporal context.

Long-Term Dependencies: Traditional RNNs face challenges in capturing long-

term dependencies due to issues like vanishing gradients. LSTMs address this

problem by introducing memory cells and gates, enabling them to retain

information over extended periods.

Feature Extraction: Per-frame visual features are typically extracted first (for example

by a CNN), and the LSTM then recognizes patterns and relationships across frames that

contribute to the understanding of the video content.

Training: LSTM models are trained on labeled video data, where the input is

video frames, and the output is corresponding textual descriptions or labels. This

training process enables the model to learn the associations between visual content

and textual representations.

Overall, the combination of video-to-text conversion and LSTM models allows for the

creation of systems that can automatically generate textual descriptions or summaries of the

content within videos, making it easier to understand and analyze visual information.

5.1 Working principle of video-to-text conversion with LSTM models

The working principle involves several key steps:

5.1.1 Preprocessing:

Video Segmentation: The input video is segmented into frames, treating each frame

as a time step in the sequence.

Feature Extraction: Relevant features, such as visual and audio features, may be

extracted from each frame to represent the content of the video.

Sequence Representation: The sequence of frames is fed into the LSTM model one

at a time, treating each frame as a sequential input.
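The preprocessing steps above amount to turning a video into an ordered list of per-frame feature vectors. The sketch below assumes the features have already been computed and simply subsamples the frames at a fixed stride, which is one common way to shorten the sequence. The function names `sample_frames` and `to_time_steps`, the stride of 3, and the two-value features are all hypothetical.

```python
def sample_frames(num_frames, step):
    """Return the indices of every `step`-th frame, starting at frame 0."""
    if step < 1:
        raise ValueError("step must be at least 1")
    return list(range(0, num_frames, step))


def to_time_steps(frame_features, indices):
    """Pick the sampled frames' feature vectors, keeping temporal order."""
    return [frame_features[i] for i in indices]


# Hypothetical clip: 10 frames, each already reduced to a 2-value feature.
frame_features = [[float(i), float(i) * 0.5] for i in range(10)]
indices = sample_frames(num_frames=len(frame_features), step=3)
sequence = to_time_steps(frame_features, indices)
```

Each element of `sequence` then serves as the input for one LSTM time step.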

5.1.2 LSTM Architecture:

Memory Cells: LSTMs have memory cells that store information over long

sequences. These cells help in retaining context and capturing dependencies between

different frames.

Gates: LSTMs have gates, including input gates, forget gates, and output gates, which

regulate the flow of information through the network. This enables the model to

selectively update and use information from previous time steps.
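To make the roles of the memory cell and the three gates concrete, the following sketch implements a single LSTM time step with plain Python lists, using the standard LSTM update equations. The parameter layout (one weight matrix and bias per gate, applied to the concatenation of the previous hidden state and the current input) and the hand-picked weights are assumptions chosen to keep the example small; trained models learn these values.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(weights, bias, vector):
    """Compute weights @ vector + bias using plain lists."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step. `params` maps each gate name to (weights, bias),
    applied to the concatenation [h_prev, x]."""
    z = h_prev + x  # list concatenation
    f = [sigmoid(a) for a in affine(*params["forget"], z)]       # what to keep
    i = [sigmoid(a) for a in affine(*params["input"], z)]        # what to write
    o = [sigmoid(a) for a in affine(*params["output"], z)]       # what to expose
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]  # new content
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


# Hypothetical 1-unit LSTM: forget gate almost fully open, input gate
# almost closed, so the memory cell should keep its previous value.
params = {
    "forget": ([[0.0, 0.0]], [10.0]),
    "input": ([[0.0, 0.0]], [-10.0]),
    "output": ([[0.0, 0.0]], [0.0]),
    "candidate": ([[0.0, 1.0]], [0.0]),
}
h, c = lstm_step(x=[1.0], h_prev=[0.0], c_prev=[0.5], params=params)
```

With these settings the cell state stays very close to its previous value of 0.5 despite the new input, which is the mechanism that lets an LSTM carry information across many frames.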

5.1.3 Training:

The LSTM model is trained using labeled data, where the input is the sequence of

video frames, and the output is the corresponding textual representation or label.

During training, the model adjusts its parameters to minimize the difference between

its predicted output and the actual labeled output.
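The "difference between predicted and labeled output" is usually measured with a loss function. A minimal sketch, assuming the common choice of cross-entropy over the vocabulary at each time step (the notes do not name a specific loss), is given below. The three-word vocabulary, the raw scores, and the function name `caption_loss` are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def caption_loss(step_scores, target_ids):
    """Average cross-entropy between the predicted word distributions and
    the labeled caption, with one target word id per time step."""
    losses = []
    for scores, target in zip(step_scores, target_ids):
        probabilities = softmax(scores)
        losses.append(-math.log(probabilities[target]))
    return sum(losses) / len(losses)


# Hypothetical vocabulary of 3 words and a 2-word labeled caption.
target_ids = [0, 2]
poor_scores = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # uniform guesses
good_scores = [[5.0, 0.0, 0.0], [0.0, 0.0, 5.0]]  # confident and correct
```

Training adjusts the model parameters so that the scores move from something like `poor_scores` toward `good_scores`, lowering the loss.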

5.1.4 Temporal Understanding:

The LSTM model learns to understand the temporal dependencies and patterns within the

video sequence. It captures information about how the content evolves over time.

5.1.5 Output Generation:

After training, the LSTM model can be used to predict textual descriptions for new, unseen

video sequences.

The model takes a sequence of video frames as input and generates a corresponding sequence

of textual descriptions.

5.2 Applications of video-to-text conversion with LSTM models

1. Video Captioning:

Explanation: Video captioning involves automatically generating textual

descriptions or captions for the content within a video. LSTM models are utilized

to understand the sequential nature of video frames and their temporal

dependencies. The model learns to associate visual features with corresponding

textual descriptions, enabling the generation of informative captions.

Example: In a video of a cooking tutorial, the model can generate captions like

"Chopping vegetables," "Simmering the sauce," providing a textual narrative of

the cooking process.

2. Content Summarization:

Explanation: Content summarization entails creating concise and informative

textual summaries of longer videos. LSTM models process the sequence of

frames, capturing key information and temporal relationships. The model learns to

distill the essential elements of the video, producing a summarized textual

representation.

Example: For a news broadcast, the model could generate a summary like "Top

stories include political updates, weather forecast, and sports highlights" based on

the content of the video.

3. Surveillance and Security:

Explanation: In surveillance applications, LSTM models analyze video footage to

automatically extract relevant information in textual form. The model can be

trained to recognize and describe specific activities or events, contributing to

efficient security monitoring.

Example: The model could identify and report suspicious activities in a

surveillance video, such as "Person entering restricted area" or "Unattended

baggage detected."

4. Educational Tools:

Explanation: LSTM models can enhance educational videos by automatically

generating transcripts or subtitles. This facilitates accessibility for individuals with

hearing impairments and provides a searchable index for educational content. The

model understands the temporal context to create accurate textual representations.

Example: In an online lecture video, the model generates subtitles like "Professor

discussing key concepts in physics" or "Graph displayed illustrating a

mathematical formula."

5.3 Drawbacks associated with video-to-text conversion using LSTM models:

1. Computational Complexity:

Explanation: Training and deploying LSTM models for video-to-text conversion can

be computationally demanding. LSTM networks, being a type of recurrent neural

network (RNN), involve complex computations across sequential data. Processing

video frames sequentially and capturing temporal dependencies require significant

computational power. This complexity can impede real-time applications and may

necessitate the use of powerful hardware, such as GPUs or TPUs, for efficient model

training and inference.

Impact: Limited scalability and real-time performance, especially in resource-

constrained environments, can be a significant challenge.

2. Data Requirements:

Explanation: LSTM models, like many deep learning architectures, rely on large

amounts of labeled data for effective training. Obtaining diverse and annotated video

datasets can be challenging and time-consuming. The quality and representativeness

of the training data directly impact the model's ability to generalize to unseen

scenarios.

Impact: Insufficient or biased training data can lead to suboptimal model

performance and may result in the model struggling to accurately describe diverse

video content.

3. Difficulty in Handling Diverse Content:

Explanation: LSTM models may encounter difficulties when faced with diverse and

complex video content. Videos containing multiple objects, fast-paced scenes, or

unexpected events can challenge the model's ability to accurately capture and describe

the content. The model might struggle to discern relevant information or provide

coherent textual representations in such scenarios.

Impact: Inaccurate or incomplete descriptions may limit the model's applicability in

environments with diverse and dynamic visual content.

4. Ambiguity in Interpretation:

Explanation: LSTM models may face challenges in handling ambiguous situations or

contexts where multiple interpretations are possible. Video content with unclear visual

cues or scenes that can be interpreted in different ways may lead to the model

generating ambiguous or inaccurate textual descriptions.

Impact: In applications requiring precise and unambiguous descriptions, such as

security or critical decision-making contexts, the model's inability to handle

ambiguity can be a limitation.

6. Attention models for computer vision tasks

Attention mechanisms in deep learning serve the crucial purpose of allowing models

to selectively focus on pertinent information within input data, addressing limitations

associated with uniform processing. By assigning varying weights to different regions or

features, attention mechanisms enhance relevance and context understanding. They facilitate

spatial and channel localization, aiding tasks like object detection, and provide interpretable

representations through attention maps. These mechanisms are versatile, finding applications

in image classification, object detection, image captioning, and natural language processing.

Furthermore, attention enables models to handle variable-length inputs effectively. Overall,

attention mechanisms contribute to adaptive, context-aware, and efficient deep learning

models, improving their performance across a spectrum of tasks and fostering interpretability.

6.1 Purpose of Attention:

Attention mechanisms aim to improve the processing of visual information by allowing the

model to dynamically focus on relevant parts of an image, rather than processing the entire

image uniformly.

6.2 Key Components:

6.2.1 Query, Key, and Value Pairs:

Definition: In attention mechanisms, each input to the mechanism is represented as a set of

three vectors: a query vector (Q), a key vector (K), and a value vector (V).

Query (Q):

Represents the information that needs to be compared with the keys.

It is often derived from the input data and transformed into a query

vector.

Key (K):

Represents the information against which the queries are compared.

It helps determine the relevance or importance of different parts of the

input.

Value (V):

Represents the actual information or content associated with the input.

The values are used to compute the weighted sum, which becomes the

output of the attention mechanism.
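The interaction of queries, keys, and values can be sketched for a single query as follows. This example assumes the widely used scaled dot-product form, in which the query-key scores are divided by the square root of the vector dimension and passed through a softmax; the scaling and the toy vectors are assumptions for illustration, since the notes describe the mechanism only in general terms.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Compare the query with every key to get one relevance score per input.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * value[d] for w, value in zip(weights, values))
              for d in range(dim)]
    return output, weights


# Hypothetical inputs: the query points in the same direction as the first key.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attention(query=[4.0, 0.0], keys=keys, values=values)
```

Because the query matches the first key, most of the weight goes to the first value, and the output is dominated by it.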

6.2.2 Spatial and Channel Attention:

Spatial Attention: Focuses on specific spatial locations within an image, allowing the

model to attend to important regions.

Channel Attention: Emphasizes specific channels or features, enabling the model to

prioritize relevant information across different feature maps.

6.3 Types of attention models

6.3.1 Spatial Attention:

Idea:

Spatial attention focuses on specific spatial regions within an input, allowing the

model to selectively attend to different parts of an image.

Application:

In tasks like object detection or image segmentation, spatial attention is crucial. The

model can dynamically allocate more attention to relevant objects or regions of

interest, improving accuracy in recognizing and localizing objects.
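A very small sketch of the idea: compute one weight per spatial location, normalize the weights so they sum to 1, and rescale the feature map with them. In a real network the per-location scores would come from learned layers; here, as a simplifying assumption, the activations themselves are used as scores. The function name `spatial_attention` and the 3x3 feature map are hypothetical.

```python
import math


def spatial_attention(feature_map):
    """Weight each location of a 2-D feature map by a softmax taken over
    all locations, so that high-scoring regions dominate the result."""
    flat = [v for row in feature_map for v in row]
    peak = max(flat)
    exps = [math.exp(v - peak) for v in flat]
    total = sum(exps)
    cols = len(feature_map[0])
    attention_map = [[exps[r * cols + c] / total for c in range(cols)]
                     for r in range(len(feature_map))]
    attended = [[a * v for a, v in zip(a_row, v_row)]
                for a_row, v_row in zip(attention_map, feature_map)]
    return attention_map, attended


# Hypothetical 3x3 feature map with one strongly activated location.
feature_map = [
    [0.1, 0.2, 0.1],
    [0.2, 3.0, 0.2],
    [0.1, 0.2, 0.1],
]
attention_map, attended = spatial_attention(feature_map)
```

The resulting `attention_map` can be visualized as a heat map over the image, which is what makes spatial attention useful for interpretation as well as accuracy.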

6.3.2 Channel Attention:

Idea:

Channel attention emphasizes specific channels or feature maps within the input,

allowing the model to prioritize important features.

Application:

In scenarios where certain channels carry more discriminative information, such as

distinguishing between different object categories in an image, channel attention

proves beneficial. It helps the model focus on the most relevant channels during

feature extraction.
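
Channel attention can be sketched in a similar way, with one weight per channel instead of one per location. The example below assumes a squeeze-and-excitation style design: global average pooling summarizes each channel, a small two-layer network produces a gate per channel, and the gates rescale the feature maps. The weight matrices `W1` and `W2` are random placeholders standing in for learned parameters.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(features, W1, W2):
    # features: (C, H, W); W1: (C // r, C); W2: (C, C // r)
    squeezed = features.mean(axis=(1, 2))    # (C,): one summary value per channel
    hidden = np.maximum(0.0, W1 @ squeezed)  # bottleneck layer with ReLU
    gates = sigmoid(W2 @ hidden)             # (C,): importance of each channel
    attended = features * gates[:, None, None]
    return attended, gates

rng = np.random.default_rng(0)
C, H, W, r = 4, 8, 8, 2  # r is the (assumed) bottleneck reduction ratio
features = rng.normal(size=(C, H, W))
W1 = rng.normal(size=(C // r, C))  # placeholder for learned weights
W2 = rng.normal(size=(C, C // r))  # placeholder for learned weights

attended, gates = channel_attention(features, W1, W2)
print(gates)  # one gate in (0, 1) per channel
```

Each gate multiplies an entire feature map, so channels judged less informative are suppressed everywhere in the image at once.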

6.3.3 Multi-Head Attention:

Idea:

Multi-head attention involves using multiple sets of queries, keys, and values in

parallel. Each set operates as an independent attention head.

Application:

This approach enhances the model's capacity to capture diverse relationships and

dependencies within the input. By attending to different aspects of the input

simultaneously, multi-head attention is particularly useful in tasks like natural

language processing and image understanding, where capturing various aspects of

context is essential.
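
A minimal sketch of multi-head self-attention follows. It assumes the common Transformer-style arrangement: the input is projected into queries, keys, and values, each projection is split into `num_heads` slices, scaled dot-product attention runs independently on every slice, and the results are concatenated and mixed by an output projection. All weight matrices are random placeholders for learned parameters, and the sizes are chosen only for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    # X: (n, d_model); every weight matrix: (d_model, d_model)
    n, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (n, d_model) -> (num_heads, n, d_head)
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, n, n)
    weights = softmax(scores, axis=-1)                   # one attention map per head
    heads = weights @ V                                  # (num_heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # join heads back together
    return concat @ W_o, weights

rng = np.random.default_rng(0)
n, d_model, num_heads = 5, 8, 2
X = rng.normal(size=(n, d_model))  # e.g. 5 image patches or 5 tokens
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

output, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(output.shape)   # (5, 8)
print(weights.shape)  # (2, 5, 5): a separate attention map for each head
```

Because each head works on its own slice of the projected features, the heads can learn different attention patterns over the same input.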

6.3.4 Cross-Modal Attention:

Idea:

Cross-modal attention allows the model to attend to information from different

modalities (e.g., visual and textual).

Application:

In tasks involving multiple sources of information, such as image-text matching or

visual question answering, cross-modal attention is employed. The model can attend

to relevant parts of both visual and textual inputs, facilitating a more comprehensive

understanding of the relationship between different modalities.
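
Cross-modal attention can be sketched by taking the queries from one modality and the keys and values from another. In the example below, text tokens act as queries over image region features, which is one common arrangement in visual question answering. The feature sizes, the shared dimension `d`, and the random projection matrices are all assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(text_feats, image_feats, W_q, W_k, W_v):
    # text_feats: (T, d_text), image_feats: (R, d_img)
    Q = text_feats @ W_q   # (T, d): queries come from the text
    K = image_feats @ W_k  # (R, d): keys come from the image regions
    V = image_feats @ W_v  # (R, d): values come from the image regions
    scores = Q @ K.T / np.sqrt(Q.shape[-1])  # (T, R)
    weights = softmax(scores, axis=-1)       # how much each word looks at each region
    return weights @ V, weights

rng = np.random.default_rng(0)
T, R = 3, 4                # 3 text tokens, 4 image regions (assumed sizes)
d_text, d_img, d = 6, 10, 8
text_feats = rng.normal(size=(T, d_text))
image_feats = rng.normal(size=(R, d_img))
W_q = rng.normal(size=(d_text, d))  # placeholders for learned projections
W_k = rng.normal(size=(d_img, d))
W_v = rng.normal(size=(d_img, d))

attended, weights = cross_modal_attention(text_feats, image_feats, W_q, W_k, W_v)
print(attended.shape)  # (3, 8): one image-informed vector per text token
print(weights.shape)   # (3, 4): attention of each token over the regions
```

The separate projections map both modalities into a shared space of size `d`, which is what makes the dot product between a word and an image region meaningful.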

6.4 Applications of attention models in various domains

1. Machine Translation:

Application:

Attention models are widely used in machine translation tasks, where the goal

is to translate text from one language to another.

Explanation:

In a sequence-to-sequence model for machine translation, attention

mechanisms help the model focus on relevant words in the source language when

generating each word in the target language. This allows the model to consider the

context of the entire input sequence, improving translation accuracy, especially for

long sentences with complex structures.

2. Image Captioning:

Application:

Attention models play a crucial role in image captioning, where the goal is to

generate descriptive captions for images.

Explanation:

When generating a caption for an image, attention mechanisms enable the

model to focus on different regions of the image while describing specific objects or

scenes. This results in more contextually relevant captions, as the model dynamically

adjusts its attention to visually salient parts of the image, improving the overall

quality of generated descriptions.

3. Object Detection:

Application:

Attention models are applied in object detection tasks to improve the

localization of objects within an image.

Explanation:

In object detection, attention mechanisms help the model selectively focus on

relevant regions where objects are present. This enables more accurate localization

and classification of objects in complex scenes. By attending to specific spatial

regions, the model can better handle scenarios with multiple objects or varying sizes,

contributing to improved object detection performance.

4. Speech Recognition:

Application:

Attention models are used in automatic speech recognition (ASR) systems to

transcribe spoken language into text.

Explanation:

In speech recognition, attention mechanisms assist the model in aligning

segments of the input audio, such as phonemes, with the corresponding words. By dynamically focusing on

relevant parts of the input audio sequence, attention helps improve the accuracy of

transcriptions, especially in the presence of variations in speech patterns, accents, or

background noise. This adaptability to varying temporal patterns enhances the

robustness of speech recognition systems.
